On Wednesday, Google introduced GKE Autopilot.

Until now, GKE has been one of those cloud products that sits in a grey area somewhere between IaaS and PaaS. It’s definitely much easier to run GKE than to roll your own Kubernetes cluster, but you are not completely free from managing infrastructure either.

With Standard GKE, the Kubernetes control plane is fully managed but you are still required to create, upgrade and otherwise manage the GKE Node Pools. The node pools are the underlying compute instances that actually run your workloads.

GKE Autopilot changes all that and is a fully-managed offering. In short, it’s GCP’s answer to Fargate on EKS.

Costs

Whereas costs were previously calculated from the instance sizes of the underlying node pools, we now have per-second billing based on the compute, memory and disk resources requested by your pods. Because Google is now managing the nodes, it is also offering an SLA of 99.9% on regional pods.
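To make the pricing model concrete, here is a minimal Python sketch of how a per-pod bill could be estimated, assuming billing is driven by the vCPU, memory and disk each pod asks for. The rates are placeholder values, not Google's published prices, and the names `PodResources` and `estimate_pod_cost` are made up for illustration.

```python
from dataclasses import dataclass

# Placeholder hourly rates for illustration only. These are NOT real
# GKE Autopilot prices; look up current figures on the GKE pricing page.
HYPOTHETICAL_RATES = {
    "vcpu_hour": 0.05,         # per vCPU per hour
    "memory_gib_hour": 0.005,  # per GiB of memory per hour
    "disk_gib_hour": 0.0001,   # per GiB of ephemeral storage per hour
}


@dataclass
class PodResources:
    """Resources attributed to a single pod (hypothetical helper type)."""
    vcpu: float
    memory_gib: float
    disk_gib: float


def estimate_pod_cost(pod, seconds, rates=HYPOTHETICAL_RATES):
    """Estimate the cost of running one pod for `seconds` seconds."""
    hourly = (
        pod.vcpu * rates["vcpu_hour"]
        + pod.memory_gib * rates["memory_gib_hour"]
        + pod.disk_gib * rates["disk_gib_hour"]
    )
    # Per-second billing: scale the hourly figure by the exact runtime.
    return hourly * seconds / 3600


if __name__ == "__main__":
    pod = PodResources(vcpu=0.5, memory_gib=2.0, disk_gib=1.0)
    # Cost of a 90-minute run under the placeholder rates.
    print(f"{estimate_pod_cost(pod, 90 * 60):.4f}")
```

The point of the sketch is that the bill scales with what the pods ask for and how long they run, rather than with the size of the nodes underneath them.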

Security

Because Autopilot is a fully managed service, Google automatically enables a set of opinionated security defaults such as Shielded VMs.

Clusters are configured to use GKE Workload Identity, which links Kubernetes Service Accounts to Google Service Accounts. This allows pods to access other cloud resources, such as storage buckets and databases, securely using a dedicated Google Service Account with restricted IAM permissions.
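As a rough illustration of that link, the Python sketch below builds the two pieces involved: a Kubernetes ServiceAccount manifest carrying the `iam.gke.io/gcp-service-account` annotation, and the IAM member string that would be granted `roles/iam.workloadIdentityUser` on the Google Service Account. The project, namespace and account names are hypothetical, and the helper `workload_identity_binding` is not part of any Google library.

```python
import json


def workload_identity_binding(project_id, namespace, ksa_name, gsa_name):
    """Return (ServiceAccount manifest, IAM member string) for Workload Identity.

    All arguments are placeholders supplied by the caller.
    """
    gsa_email = f"{gsa_name}@{project_id}.iam.gserviceaccount.com"

    # Kubernetes side: annotate the ServiceAccount with the Google
    # Service Account it should act as.
    ksa_manifest = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": ksa_name,
            "namespace": namespace,
            "annotations": {"iam.gke.io/gcp-service-account": gsa_email},
        },
    }

    # Google Cloud side: this member is granted
    # roles/iam.workloadIdentityUser on the Google Service Account.
    iam_member = f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"
    return ksa_manifest, iam_member


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    manifest, member = workload_identity_binding(
        "my-project", "default", "storage-reader", "bucket-reader"
    )
    print(json.dumps(manifest, indent=2))  # JSON manifests work with kubectl apply -f
    print(member)
```

Pods that run under the annotated Kubernetes Service Account then get only the IAM permissions held by the linked Google Service Account.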

More broadly, GKE Autopilot automatically implements the recommendations in the GKE Hardening Guidelines and we think that the fact GKE Autopilot enables this fast “production-ready deployment” will be a big selling point with enterprise customers.

A point to note is that GKE Autopilot does not make use of GKE Sandbox whereas Cloud Run does. The docs say, “[…] GKE recommends that customers with strong isolation needs, such as high-risk or untrusted workloads, run their workloads on GKE using GKE Sandbox to provide multi-layer security protection.” So GKE Standard or Cloud Run might be a better option if you have those strong isolation needs. (Credit: Thanks to Kat Traxler for pointing this out!)

Limitations

Kelsey Hightower says, “GKE Autopilot locks down parts of the Kubernetes API and enforces security policies which enables us to hide the nodes while enhancing security. That comes with tradeoffs. Some workloads won’t run. Please review the limitations.”

I can see two main deal-breakers that will be an issue for some users.

Firstly, you cannot create custom mutating admission webhooks for Autopilot clusters (you can create custom validating webhooks). The consequence of this is that you cannot install Istio either. This is a shame and I hope it’s something Google addresses soon.

Secondly, Kubernetes monitoring tooling requires access that is restricted and therefore will not work. At launch, only monitoring from Datadog and CI/CD from GitLab are supported.

Privileged Mode for containers is not allowed so that nodes are protected from changes. Neither can you SSH to your nodes or modify them in any way since they’re fully managed by Google.

It is possible to run DaemonSets though, something you cannot do with EKS on Fargate.

Demo

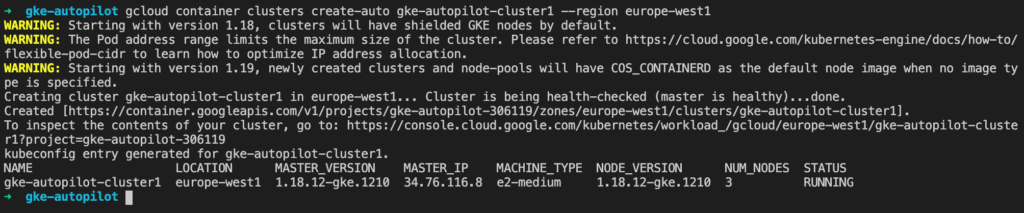

Creating a cluster using the CLI is easy. It deploys into the project’s “default” VPC and automatically creates the subnets for pods and services in the background.

Note that by default the cluster has a public IP. However, you can also launch an Autopilot cluster as a “private cluster” with no public IP for the control plane for a more secure configuration.
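For readers who script their cluster creation, here is a small Python sketch that assembles a `gcloud container clusters create-auto` command for either the public or the private case. The cluster name and region are placeholders, the helper `create_auto_command` is hypothetical, and the private-cluster flags are my assumption about what a locked-down setup needs, so confirm them with `gcloud container clusters create-auto --help` before relying on them.

```python
import shlex


def create_auto_command(name, region, private=False):
    """Build the argument list for creating an Autopilot cluster."""
    cmd = ["gcloud", "container", "clusters", "create-auto", name,
           "--region", region]
    if private:
        # Assumed flags for a private cluster: nodes get internal IPs
        # only, and the control plane is reached via a private endpoint.
        cmd += ["--enable-private-nodes", "--enable-private-endpoint"]
    return cmd


if __name__ == "__main__":
    # Print the commands instead of running them; pass the list to
    # subprocess.run(cmd, check=True) to execute for real.
    print(shlex.join(create_auto_command("autopilot-demo", "europe-west2")))
    print(shlex.join(create_auto_command("autopilot-demo", "europe-west2",
                                         private=True)))
```

Keep in mind that a cluster with only a private endpoint can be reached only from inside the VPC or a connected network.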

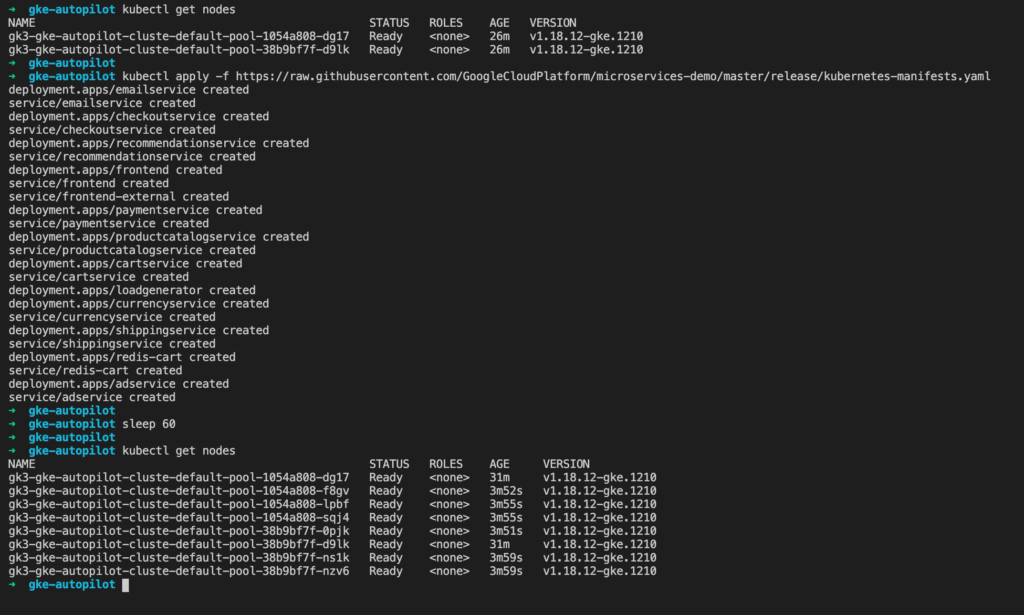

Below you can see how I start with two nodes. After I deploy a microservices demo application, GKE provisions extra nodes to run my deployments and services.

As you can see, although Google is managing our nodes with Autopilot, we can still see them using “kubectl get nodes” and describe them as normal. GKE Autopilot also supports pod affinity, so front-end pods can be colocated on the same node as backend pods.
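To show what such a colocation rule looks like, the Python sketch below adds a standard Kubernetes `podAffinity` term to a pod spec and prints it as JSON. The image name and the `app: backend` label are hypothetical, and `with_pod_affinity` is an illustrative helper; it is also worth checking the current Autopilot limitations to confirm which topology keys are accepted.

```python
import json


def with_pod_affinity(pod_spec, match_labels):
    """Return a copy of `pod_spec` that must land on a node already
    running a pod whose labels match `match_labels`."""
    term = {
        "labelSelector": {"matchLabels": dict(match_labels)},
        # "Same node" is expressed with the per-node hostname label.
        "topologyKey": "kubernetes.io/hostname",
    }
    spec = dict(pod_spec)  # shallow copy, so the caller's dict is untouched
    spec["affinity"] = {
        "podAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [term]
        }
    }
    return spec


if __name__ == "__main__":
    # Hypothetical front-end pod spec that should sit next to backend pods.
    frontend = {"containers": [{"name": "frontend",
                                "image": "example/frontend:latest"}]}
    print(json.dumps(with_pod_affinity(frontend, {"app": "backend"}), indent=2))
```

The resulting structure belongs under the pod template's `spec` in a Deployment.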

It took a couple of minutes for the new nodes to appear and the pods to deploy but it worked smoothly.

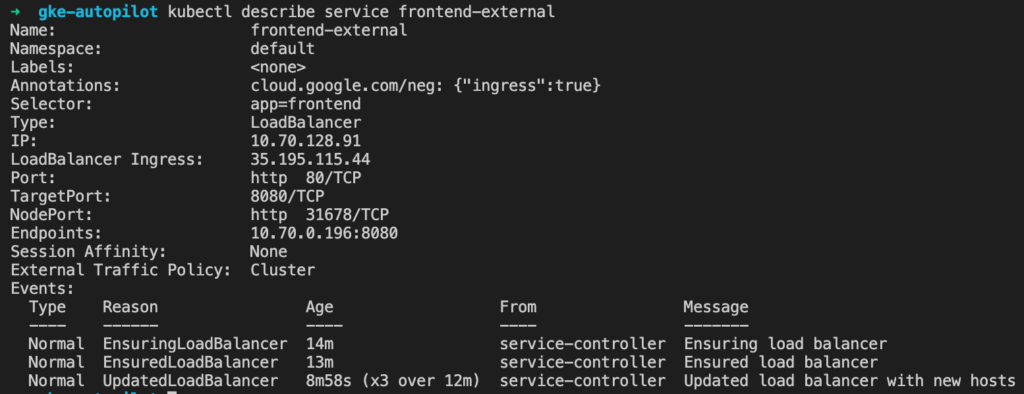

The Autopilot cluster also created an external HTTPS load-balancer so that our demo app is accessible from the public internet.

Conclusion

Users with very large clusters may decide it’s more economical to stick with Standard GKE. Others may want the flexibility or will want to wait for tools or features they need to become supported for GKE Autopilot.

However, for most users who just want to deploy their applications with minimum fuss, GKE Autopilot should become the default choice for Kubernetes workloads.

Get In Touch

If you want to know more about GKE or GKE Autopilot, please get in touch. MakeCloud is a Google Cloud partner, consultancy and managed services provider based in London.